By: Yohai Schweiger

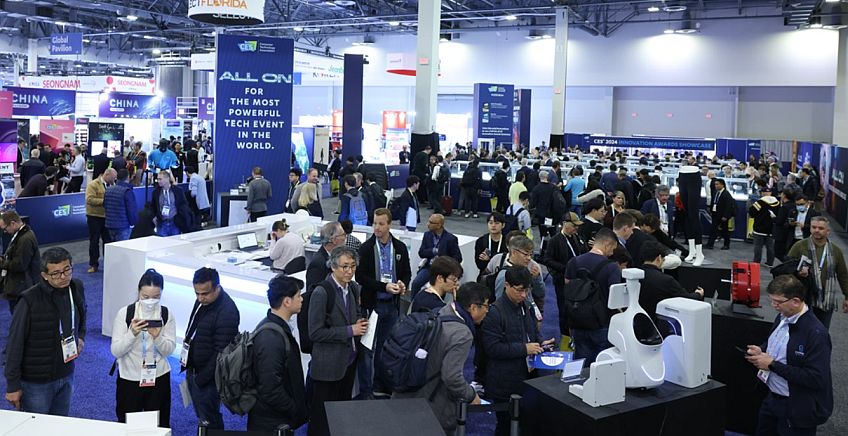

CES 2026 opens this week in Las Vegas, and once again the Israeli presence is defined less by the number of companies than by the direction they represent. A clear pattern emerges across the Israeli exhibitors:

- LiDAR moving behind the vehicle windshield
- neuromorphic chips that push AI directly into sensors
- AI agents that require enterprise-grade governance layers
- robots, sensing systems and digital health solutions already operating in the field

For Israeli companies, CES is becoming less a stage for flashy demos and more a showcase for technologies built to integrate into real-world systems that demand reliability, safety and scalability. Artificial intelligence is no longer the headline act but a component embedded inside products and operational processes. The following ten Israeli companies offer a snapshot of this shift from technological promise to deployable solutions.

Innoviz: Change the location, change the game – LiDAR moves behind the windshield

Innoviz arrives at CES with the first public unveiling of InnovizThree, a LiDAR sensor that aims to change not only performance metrics but the very role of LiDAR within the vehicle sensing stack. The key innovation is not incremental gains in range or resolution, but the ability to install the sensor behind the front windshield, inside the passenger cabin. For automakers, this location is considered strategically critical, offering better protection, simpler installation and maintenance, and cleaner integration into vehicle architecture. The move goes to the heart of the long-running debate around LiDAR, positioning it not as a controversial external add-on, but as an integral sensor alongside cameras and radar. Beyond automotive use, Innoviz signals that InnovizThree is also targeting additional markets such as humanoid robots, drones and physical AI systems, where compact, reliable and energy-efficient 3D sensing is required. Unveiling the sensor at CES is no coincidence, as the show has become a central platform for automotive technology innovation and a key meeting point between technology suppliers and automakers seeking mature solutions for next-generation sensing and autonomous driving systems.

Carteav: Modest autonomy that works today

Carteav approaches autonomous driving from a very different angle from the one often presented at technology exhibitions. While CES frequently serves as a stage for prototypes and long-term visions, Carteav arrives with a clearly defined, deployable solution for low-speed autonomous mobility in controlled environments. At CES, the company will showcase its transportation platform, built around small autonomous electric vehicles and smart fleet management and designed for campuses, resorts, parks and gated communities. Rather than tackling the full complexity of autonomous driving on public roads, Carteav focuses on environments where autonomy can be deployed today, with lower regulatory and safety barriers. This pragmatic approach places the company within a broader trend of applied autonomy. That trend favors functional, near-term solutions over impressive but distant demonstrations and shows how AI and fleet management can already deliver tangible operational value.

POLYN Technology: Neuromorphic intelligence directly on the sensor

POLYN is making its first appearance at CES with its edge AI technology based on analog neuromorphic chips. Founded in 2019, the company is developing an approach that enables intelligent processing directly on the sensor, before data is sent to a central processor or the cloud. The technology targets applications where ultra-low power consumption and fast response times are critical, including medical devices, wearables, industrial robotics and large-scale IoT systems. POLYN's innovation goes beyond pushing computation to the edge; it redefines the role of the sensor itself. Instead of acting as a passive data source, the sensor becomes an active computational component capable of filtering, recognition and early decision-making at the hardware level. Presenting this technology at CES, rather than at an academic conference, signals a transition toward commercial readiness. It positions POLYN as an alternative to AI running on power-hungry digital accelerators, at a time when the market is seeking more efficient and scalable solutions for physical AI.

Avon AI: Managing AI agents as an organizational challenge

Avon AI is a very young Israeli startup operating in one of the fastest-emerging areas of artificial intelligence: the management and deployment of AI agents within enterprises. At CES, the company will present a platform designed to govern, monitor and operationalize intelligent agents in organizational environments, at a moment when many enterprises are moving from experimenting with models and chatbots to deploying AI systems that perform actions, make decisions and interact with sensitive data. Rather than building another model or conversational interface, Avon AI focuses on the control layer: who operates the agent, which systems it can access, what it is allowed to do, and how unexpected behavior can be monitored and mitigated over time. The platform enables organizations to treat AI agents as digital employees, with transparency, measurement and continuous improvement, rather than experimental code owned solely by development teams. The company’s debut at CES aligns with the expectation that AI agents will be one of the show’s dominant themes, reflecting AI’s broader shift from demos to enterprise production environments, where reliability, governance and compliance become baseline requirements.

bananaz AI: Artificial intelligence for mechanical engineers

While Avon AI addresses AI agents at the enterprise level, bananaz AI focuses on a very different audience: mechanical engineers and product development teams. At CES, the company will unveil its Design Agent for the first time. The AI agent integrates directly into engineering workflows, reads CAD files and technical drawings, and helps identify design issues, compliance risks and manufacturing challenges early in the development cycle. Unlike general-purpose AI tools, bananaz's agent is built on deep domain knowledge of mechanical engineering and of the relationships between geometry, materials, manufacturing constraints and safety requirements. CES is a consumer-oriented event that has increasingly become a platform for the entire product development pipeline, and showcasing the solution there underscores the link between engineering tools and the physical products that ultimately reach consumers. For bananaz, this marks its first global exposure. For the market, it illustrates how AI agents are moving into the core of industrial design and engineering, not just customer service, marketing or analytics.

iRomaScents: Digital experiences with a sense of smell

iRomaScents is an Israeli startup operating in a less conventional corner of technology and user experience. The company has developed a smart digital scent delivery system that adds another sensory layer to content and consumer experiences. Having already appeared at CES in previous years, iRomaScents will present an advanced version of its solution, this time focusing on deeper integration between scent, digital content and interactive experiences. The compact system is based on digitally controlled scent capsules. It enables precise control over timing, intensity and personalization, so that scent becomes a natural part of the experience rather than a novelty effect. The company's approach positions smell as a functional interface layer alongside screen and audio, opening the door to applications in consumer products, retail and home entertainment. Its return to CES with a stronger emphasis on commercial use cases reflects a broader shift from experimental multisensory experiences toward practical, scalable applications.

Validit AI: Real-time identity and intent verification

Validit AI is an Israeli startup operating at the intersection of cybersecurity, artificial intelligence and behavioral analysis. At CES, the company will present its platform for real-time identity verification and intent analysis, which aims to extend authentication beyond a single login event. Instead of relying on passwords, codes or one-time biometric checks, Validit AI continuously analyzes usage patterns and behavior to detect anomalies and block unwanted actions at an early stage. Continuous verification of identity and intent becomes increasingly critical for organizations that deploy AI in production and operate autonomous systems handling sensitive data and processes, because the risk grows as those systems gain autonomy. The company's presence highlights CES's evolving role as a venue not only for consumer products but also for foundational technologies of trust and security in the AI era, particularly those addressing the risks introduced by intelligent and autonomous systems.

Smart Sensum: Metamaterials as next-generation sensors

Smart Sensum is an Israeli deep-tech company focused on sensing and wireless communication. It develops smart radar and antenna systems based on metamaterials and metasurfaces. At CES, the company will showcase its compact mmWave radar systems and programmable antennas, designed to deliver more accurate sensing and communication in smaller form factors and with lower power consumption. Smart Sensum's approach addresses long-standing bottlenecks in sensing technologies, including size, complexity and cost, by replacing parts of traditional RF electronics with intelligently engineered electromagnetic structures. The solutions target applications such as robotics, drones, industrial IoT and mobility systems, where reliable sensing and communication are essential for autonomous operation. Its presence at CES reflects the show's growing emphasis not only on AI software but also on the sensing and communication hardware that forms the physical foundation of intelligent systems.

Motion Informatics: Neurological rehabilitation with AI and AR

While CES is often associated with consumer innovation, the exhibition has increasingly expanded into digital health, where AI-driven and data-centric technologies are reshaping clinical practice. In this context, Motion Informatics will present its neurological rehabilitation solutions, which combine AI, biofeedback and augmented reality. The company's platforms analyze muscle activity in real time using EMG data and adapt electrical stimulation and training protocols to the patient's condition. The result is an interactive, personalized rehabilitation process. Patients perform guided exercises at home or under clinical supervision, while AI models adjust the program over time to optimize neurological recovery. Motion Informatics' presence at CES reflects growing demand in digital health for practical, outcome-driven solutions. It also places the company within a broader wave of cross-industry technologies that merge AI, advanced sensing and patient-centered experience.

temi: Robotics as a service, not a gimmick

temi is one of the most established Israeli companies in the service robotics space. It comes to CES not to present a futuristic concept but to show the next stage in its robot's evolution as a mature, deployable product. This year, the company will present an updated version of the temi platform, focusing on expanded capabilities, greater modularity and deeper integration with enterprise systems and AI applications. The robot is already deployed in hotels, senior living facilities, lobbies and service environments. It is positioned as a practical tool that performs defined tasks, such as welcoming guests, guiding visitors, providing information and connecting to existing management systems. temi's presentation at CES highlights a broader shift in robotics, from general-purpose machines that showcase basic capabilities to service platforms that deliver clear operational value in real-world environments. That shift positions the company as a seasoned player in a market that is only now beginning to scale.