By Yohai Schwiger

During Mobileye’s earnings call yesterday, held after the release of its financial results, CEO Amnon Shashua revealed that the company is developing a new intelligence layer for its autonomous driving systems. The goal, he explained, is to add a level of understanding above the system’s immediate decision-making layer, the one responsible for analyzing the environment, identifying open lanes, and computing trajectories in real time. Shashua described the shift as a fundamental change in how the system understands the driving environment, stressing that “autonomous driving is not just a geometric perception problem, but a decision-making problem in a social environment.”

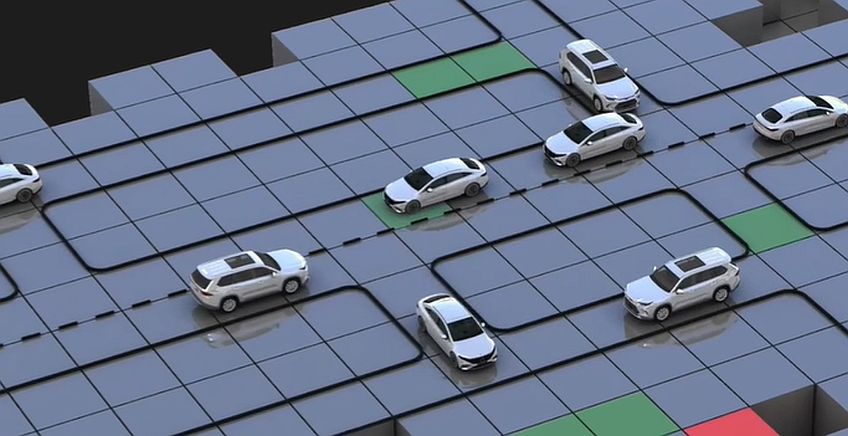

Shashua framed the road as a space populated by many independent agents, each making decisions that influence the behavior of others. Every driver, pedestrian, cyclist, and other road user participates in one interconnected system. Any action by an autonomous vehicle alters the behavior of its surroundings, and those reactions, in turn, feed back into the vehicle’s own decision-making. Under such conditions, Shashua argued, understanding the road requires a broader perspective than simply identifying an open path or calculating an optimal trajectory.

A Billion Simulation Hours Overnight

Against this backdrop, Shashua introduced the concept of Artificial Community Intelligence, or ACI. According to him, the idea originates in academic research but has never before been implemented at commercial scale in autonomous driving systems. “This is the first time an academic concept has been fully productized in an autonomous vehicle decision-making system,” he said. ACI is built on multi-agent reinforcement learning and is aimed at understanding the dynamics of a community. The core idea is to train the system to understand how one decision affects the behavior of others, and how that chain of reactions ultimately feeds back into the vehicle itself.

This is a dimension familiar to every human driver, even if they are rarely conscious of it. A driver approaching a busy roundabout or a school pickup zone does not merely calculate available space or distance to the car ahead. Instead, they interpret the entire situation: children standing on the sidewalk, parents double-parked, impatient drivers nearby, and a general sense that the space is operating under different social rules. The decision to slow down, yield, or wait an extra moment is not derived from geometry alone, but from a deeper understanding of context and expectations.
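The interaction effect Shashua describes can be made concrete with a deliberately tiny model. The sketch below is not reinforcement learning and not Mobileye’s ACI; it is a hand-written toy, assuming a single lane of following cars, a hypothetical safe-gap threshold (`SAFE_GAP`), and a hypothetical damping factor (`DAMPING`) for how much of one driver’s braking is passed on to the next. All function names are illustrative. It contrasts a purely geometric check (does the gap physically fit?) with an evaluation that also counts how many other drivers are forced to react, with `penalty_per_brake` standing in, as an assumption, for the cost those reactions eventually impose back on the ego vehicle.

```python
from typing import Dict, List, Tuple

SAFE_GAP = 10.0  # hypothetical gap (m) below which a following driver brakes
DAMPING = 0.6    # hypothetical share of a braking shortfall passed to the next car


def braking_cascade(insert_gap: float, gaps_behind: List[float]) -> int:
    """Count how many following cars brake after the ego vehicle cuts in.

    insert_gap: gap left in front of the car directly behind the ego vehicle.
    gaps_behind[i]: gap between follower i and follower i + 1.
    """
    shortfall = max(0.0, SAFE_GAP - insert_gap)
    braked = 0
    remaining = iter(gaps_behind)
    while shortfall > 0:
        braked += 1                      # this follower reacts by braking
        next_gap = next(remaining, None)
        if next_gap is None:             # no more cars behind
            break
        # Braking eats into the gap behind, which may force the next car to react.
        shortfall = max(0.0, SAFE_GAP - (next_gap - DAMPING * shortfall))
    return braked


Options = Dict[str, Tuple[float, float]]  # name -> (insert gap in m, seconds ego saves)


def geometric_choice(options: Options, min_gap: float = 4.0) -> str:
    """Fastest option that merely fits physically; ignores how others react."""
    feasible = {name: saved for name, (gap, saved) in options.items() if gap >= min_gap}
    return max(feasible, key=feasible.__getitem__)


def community_choice(options: Options, gaps_behind: List[float],
                     penalty_per_brake: float = 2.0) -> str:
    """Lowest-cost option once the reactions of other drivers are counted."""
    def cost(name: str) -> float:
        gap, saved = options[name]
        return -saved + penalty_per_brake * braking_cascade(gap, gaps_behind)
    return min(options, key=cost)


options = {"merge_now": (6.0, 3.0), "wait": (12.0, 0.0)}
traffic = [8.0, 9.0, 20.0]
print(geometric_choice(options))           # merge_now
print(community_choice(options, traffic))  # wait
```

Here the 6 m gap passes the geometric test but sets off braking in three following cars, so the community-aware evaluation prefers to wait. A multi-agent training setup would learn such reactions from simulated agents rather than hard-coding them; the toy only shows why a geometrically open gap can still be the wrong choice.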

To train such capabilities, Mobileye makes extensive use of simulation. Shashua explained that while visual perception can be trained using real-world data, planning and decision-making in a multi-agent environment require far larger volumes of data than real-world driving can practically supply. “The sample complexity for planning is significantly higher,” he said, “because the actions you take influence the behavior of others.” The solution, according to Shashua, is large-scale simulation that allows the company to run massive numbers of scenarios. “We can reach a billion training hours overnight,” he said, highlighting the advantage of combining simulation with Mobileye’s global REM (Road Experience Management) mapping infrastructure, which provides a realistic foundation for training.

Thinking Fast, Thinking Slow

Mobileye divides the insights learned through simulation into two cognitive layers: Fast Think and Slow Think. The Fast Think layer is responsible for real-time actions and manages the vehicle’s immediate safety. It runs at high frequency, dozens of times per second, and covers steering, braking, and lane keeping. This reflexive layer runs directly on the vehicle’s hardware and cannot tolerate delays or uncertainty.

By contrast, the Slow Think layer focuses on understanding the situation in which the vehicle is operating. It does not ask only what is permitted or prohibited, but what is appropriate in a given context. Shashua described it as a layer designed to interpret the meaning of complex, non-routine scenes, rather than merely avoiding immediate hazards. For example, when a police officer blocks a lane and signals a vehicle to wait or change course, a safety system will know not to hit the officer. A contextual understanding layer, however, allows the vehicle to recognize that this is not a typical obstacle, but a human directive that requires a change in behavior.

The distinction between Fast Think and Slow Think closely echoes the model of fast and slow thinking popularized by Nobel Prize-winning psychologist Daniel Kahneman in his book Thinking, Fast and Slow, which contrasts reflexive, automatic thinking with slower, more deliberate reasoning. While Shashua did not mention Kahneman by name, the conceptual parallel is clear.

Architecturally, this translates into a clear separation between real-time systems and interpretive systems. Fast Think operates within tight control loops, while Slow Think runs at a lower cadence, providing context and high-level guidance. Its output is not a steering or braking command, but a shift in driving policy. It may cause the system to adopt more conservative behavior, avoid overtaking, or prefer yielding the right of way. The planner continues to compute trajectories in real time, but does so under a new set of priorities.
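The two-rate arrangement described in the paragraph above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Mobileye’s architecture: the loop rates (`FAST_HZ`, `SLOW_HZ`), the scene tags, the `DrivingPolicy` fields, and the stopping-distance rule in `fast_think` are all hypothetical. The slow layer only returns a policy; the fast layer keeps computing a target speed on every tick, under whatever priorities the latest policy sets.

```python
import math
from dataclasses import dataclass
from typing import List, Set

FAST_HZ = 20        # hypothetical: fast loop runs "dozens of times per second"
SLOW_HZ = 1         # hypothetical: slow loop refreshes the policy once per second
MAX_DECEL = 4.0     # hypothetical comfortable deceleration, m/s^2
STOP_MARGIN = 5.0   # hypothetical buffer kept in front of any obstacle, m


@dataclass(frozen=True)
class DrivingPolicy:
    speed_scale: float = 1.0     # fraction of the speed limit the planner may use
    allow_overtake: bool = True  # carried for a fuller planner; unused in this toy
    prefer_yield: bool = False   # carried for a fuller planner; unused in this toy


def slow_think(scene_tags: Set[str]) -> DrivingPolicy:
    """Interpret context and return a policy shift, never a control command."""
    if "officer_directive" in scene_tags:   # e.g. an officer signalling to wait
        return DrivingPolicy(speed_scale=0.0, allow_overtake=False, prefer_yield=True)
    if "school_zone" in scene_tags:
        return DrivingPolicy(speed_scale=0.5, allow_overtake=False, prefer_yield=True)
    return DrivingPolicy()


def fast_think(policy: DrivingPolicy, speed_limit: float, gap_to_obstacle: float) -> float:
    """Compute a target speed (m/s) every tick under the current policy."""
    # Highest speed from which the car can still stop before the obstacle: v = sqrt(2 a d).
    stoppable = math.sqrt(2 * MAX_DECEL * max(0.0, gap_to_obstacle - STOP_MARGIN))
    return min(speed_limit * policy.speed_scale, stoppable)


def run(tags_per_second: List[Set[str]], speed_limit: float = 14.0,
        gap_to_obstacle: float = 40.0) -> List[float]:
    """Run the fast loop at FAST_HZ, refreshing the policy at SLOW_HZ."""
    policy = DrivingPolicy()
    targets = []
    for tick in range(len(tags_per_second) * FAST_HZ):
        if tick % (FAST_HZ // SLOW_HZ) == 0:
            policy = slow_think(tags_per_second[tick // FAST_HZ])
        targets.append(fast_think(policy, speed_limit, gap_to_obstacle))
    return targets


targets = run([set(), {"school_zone"}, {"officer_directive"}])
print(targets[0], targets[20], targets[40])  # 14.0 7.0 0.0
```

`slow_think` never emits a speed or steering command, so making it slower or heavier would not change the fast loop’s timing; it can only change the priorities the planner works under.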

Shashua noted that because the Slow Think layer is not safety-critical and does not operate in real time, it is not bound by the same hardware constraints as the execution layer. In principle, such processing could even be performed using heavier compute resources, including the cloud, to analyze complex situations more deeply. He emphasized, however, that this does not involve moving driving decisions to the cloud, but rather expanding the system’s ability to understand situations that do not require immediate response.

Will This Extend to Mentee Robotics’ Humanoids?

The connection between ACI and the Slow Think layer is most evident during training. Simulation does not operate while the vehicle is driving, but serves as a laboratory in which the system learns social dynamics. In real time, the vehicle does not recompute millions of scenarios, but instead recognizes situations it has encountered before and applies intuition acquired through prior training. In this sense, simulation is where understanding is built, and Slow Think is where it is expressed.

The concepts Shashua outlined are not necessarily limited to the automotive domain. They may also be relevant to Mobileye’s recent expansion into humanoid robotics through its acquisition of Mentee Robotics. Like autonomous vehicles, humanoid robots operate in multi-agent environments, where humans and other machines react to one another in real time. Beyond motor control and balance, such robots must understand context, intent, and social norms. Mobileye has noted that Mentee’s robot is designed to learn by observing behavior around it, rather than relying solely on preprogrammed actions — an approach that aligns naturally with ideas such as ACI and the separation between immediate reaction and higher-level understanding.

Why Emphasize This in an Earnings Call?

This raises an obvious question: why did Shashua choose to devote so much time during an investor call to a deep technical topic focused on internal AI architecture, rather than on revenues, forecasts, or new contracts? The choice appears deliberate. On one level, it draws a clear line between Mobileye and approaches that frame autonomous driving as a problem solvable through technological shortcuts or brute-force increases in compute. Shashua sought to emphasize that the core challenge lies in understanding human interaction, not merely in object detection or trajectory planning. At the same time, the discussion serves as expectation management, explaining why truly advanced autonomous systems take time to develop and do not immediately translate into revenue. On a deeper level, it signals a broader strategy: Mobileye is not just building products or chips, but an intelligence layer for physical AI — one that could extend beyond the current generation of autonomous vehicles into adjacent domains such as robotics.

In that sense, the most technical segment of the earnings call was also the clearest business message. Not a promise for the next quarter, but an explanation of why the longer, more complex path is, in Mobileye’s view, the right one.