By Yohai Schwiger

Cognata, a Rehovot-based company best known for developing simulators for training and testing autonomous driving systems, has unveiled a surprising new product that marks a strategic pivot into the defense market—an arena entirely new for the company. Until recently, Cognata focused almost exclusively on commercial automotive customers. But at CES this month in Las Vegas, it introduced AVBox: a compact hardware-software kit that enables standard military vehicles, both light and heavy, to operate autonomously in off-road environments.

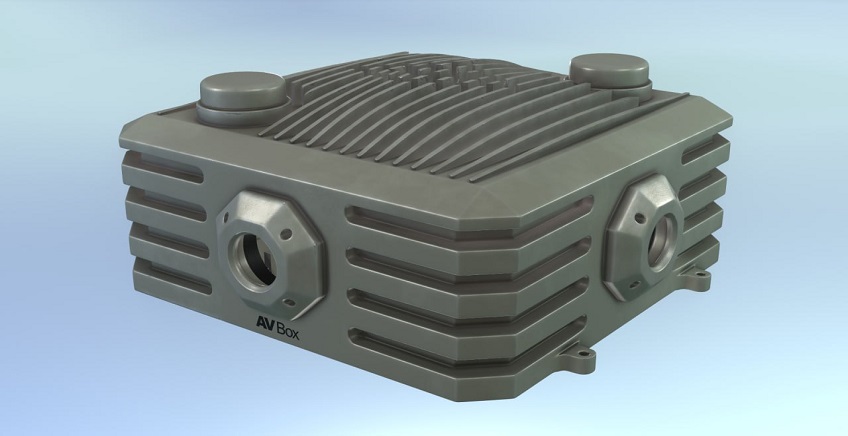

AVBox is a self-contained unit mounted on the vehicle’s roof, integrating a sensor suite, onboard computing, and vision- and navigation-focused algorithms. It is designed specifically for off-road scenarios—unstructured terrain without lanes, traffic signals, or civilian infrastructure—and is built around predefined, mission-oriented autonomy. Rather than full autonomy, the system supports limited operational autonomy: independently navigating a vehicle over several kilometers, primarily for logistical and operational tasks, even in the absence of GPS, digital maps, or continuous communication with a remote operator.

Bridging Remote Control and Autonomy

This is not a prototype but a finished commercial product. AVBox is already undergoing advanced evaluation by a potential customer, following pilot trials in which it was installed on operational armored vehicles to convert them for remote or unmanned operation—particularly relevant for dangerous or logistics-heavy missions. Cognata’s solution effectively bridges the gap between remote control and autonomy. While many military vehicles today rely on remote operation, loss of communication—due to terrain, interference, or distance—often renders them immobile.

In such cases, AVBox can assume control and autonomously complete the mission based on predefined objectives, ensuring operational continuity without requiring full, always-on autonomy. Similar concepts are already being applied in Ukraine’s drone warfare. Designed for easy integration into existing platforms, AVBox enables rapid upgrades of current fleets without lengthy and costly development or procurement cycles. Cognata is actively marketing the system in Europe and the United States, which is why it chose to debut AVBox at CES.
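The handover described above, where remote control is used while the link is up and the onboard system takes over when it drops, can be pictured as a small mode selector. The sketch below is purely illustrative and is not Cognata's implementation: `LinkWatchdog`, `select_mode`, the 2-second timeout, and the rule that the operator regains control once the link returns are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    REMOTE = "remote"          # operator is driving over the link
    AUTONOMOUS = "autonomous"  # onboard system continues the predefined mission
    HOLD = "hold"              # no link and nothing left to do: stop in place


@dataclass
class LinkWatchdog:
    """Tracks the last time a heartbeat arrived from the remote operator."""
    timeout_s: float = 2.0     # assumed threshold, not a published figure
    last_heartbeat_s: float = 0.0

    def heartbeat(self, now_s: float) -> None:
        self.last_heartbeat_s = now_s

    def link_alive(self, now_s: float) -> bool:
        return (now_s - self.last_heartbeat_s) <= self.timeout_s


def select_mode(watchdog: LinkWatchdog, now_s: float, waypoints_left: int) -> Mode:
    """Pick the driving mode for the current control cycle."""
    if watchdog.link_alive(now_s):
        return Mode.REMOTE          # assumption: the operator has priority
    if waypoints_left > 0:
        return Mode.AUTONOMOUS      # link lost, mission objectives remain
    return Mode.HOLD                # link lost, mission already complete
```

In this sketch the vehicle never freezes merely because the link dropped; it stops only when there are no predefined objectives left to pursue.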

AVBox is offered in three sensing configurations. The base “Scout” version relies on daytime cameras. A more advanced variant adds thermal cameras for night operations, while the most sophisticated configuration incorporates LiDAR to handle complex terrain. The system’s modular design allows customers to tailor it to mission requirements, terrain conditions, and budget constraints, with an emphasis on broad deployment rather than limited, high-cost use.

A Military Product Born from a Civilian Demo

The transition to an operational autonomous system emerged from two parallel developments in recent years. The first was the expansion of Cognata’s simulator into off-road driving domains, including robotic training and open-terrain simulation. Initially, customers for these capabilities came primarily from civilian sectors such as agriculture and mining, but over the past two years the company has also begun supplying them to the Israeli military. This exposure highlighted real-world defense needs and the gap between available technology and operational requirements.

The second development was a response to customer doubts. Shay Rotman, Cognata’s Vice President of Business Development and Marketing, told Techtime that some automotive OEMs were skeptical about the reliability of simulation and synthetic data for training computer-vision systems. “To prove that the technology works,” Rotman said, “we developed a computer-vision stack in 2023 that included sensors, processors, and algorithms. Initially, it was meant as a technological demo to show potential customers how advanced vision systems could be built using purely synthetic data.”

As part of that effort, Cognata developed a monocular depth technology capable of estimating distances and 3D structure from a single image. The system was trained on hundreds of thousands of images generated entirely in simulation. “It started out as a response to our own pain point and a way to prove the value of our simulation,” Rotman said. “At some point, we realized we had the foundation for a completely new type of system.”
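To see why a per-pixel depth estimate from a single image is enough to recover 3D structure, it helps to look at standard pinhole-camera geometry. The sketch below says nothing about how Cognata's model works internally. It only shows the textbook step that follows any depth estimator: turning a depth map into 3D points. The function name `backproject` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative, and depth is assumed to be measured along the camera's optical axis.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def backproject(depth: List[List[float]], fx: float, fy: float,
                cx: float, cy: float) -> List[Point3D]:
    """Convert a depth map (meters per pixel) into camera-frame 3D points.

    Uses the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy,
    where (u, v) is the pixel column and row and Z is the estimated depth.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels with no valid depth estimate
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Because every pixel carries its own depth, one camera frame yields a full point cloud, which is the kind of information that otherwise requires stereo cameras or LiDAR.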

According to Rotman, the decision to focus on limited, mission-oriented autonomy rather than full autonomy was also shaped by lessons learned from the automotive sector. “Civilian automotive aims for full autonomy, but that’s still far off and requires massive resources. In the military—especially in off-road driving—you can solve real problems today with task-focused autonomy.” That philosophy also influenced pricing. “In many militaries, there’s a gap between development and procurement because advanced systems are so expensive,” he said. “We wanted to offer something affordable enough for wide deployment—not a system that remains stuck in the experimental phase.”

A Growing Market Offsetting a Stalled One

AVBox emerges against the backdrop of a challenging reality in Cognata’s original market. Global investment in automotive technology has declined steadily in recent years, while major carmakers—particularly in Europe and the United States—face mounting business pressures. “Auto-tech today is a tough market,” Rotman said. “Many manufacturers are dealing with competitive and financial challenges and are investing less in autonomous driving development.”

As a result, Cognata significantly reduced its workforce over the past two years, adapting to delayed projects, slower investment cycles, and the prolonged path to commercialization in civilian automotive. “It’s a heavily regulated market with long development cycles and very high costs,” Rotman noted. “Not every company can keep moving at the same pace as before.”

With AVBox, Cognata is now positioning itself as a company operating in two parallel domains: simulation, where it continues to support the development and training of autonomous systems, and operational autonomy tailored to clearly defined defense needs. “We’ve moved from being a pure simulation provider to a company with two products,” Rotman concluded. “And in defense, there is openness, demand, and a genuine opportunity today.”