By: Nikhil Amin and Harsha Vardhan, Verification Group, Synopsys

As chip designs grow, so does the total power they consume. To meet the growing intelligence and power-management demands of modern applications, system-on-chip (SoC) designers and verification engineers need comprehensive solutions that leverage low-power design techniques to enable fine-grained power management. Over the years, the Unified Power Format (UPF) standard, intended for specifying and verifying the power intent of integrated circuit (IC) designs, has advanced and created a wide range of opportunities.

However, for low-power cells such as hard macros, RAM cells, or pads, the connectivity of low-power control signals remains ambiguous. In this article, which was originally published on the “From Silicon to Software” blog, you’ll learn about the basics of UPF, its importance in the power landscape, how to expand low-power signoff with custom mechanisms, and how to take power-managed designs to the next level.

The History of Unified Power Format (UPF)

As development teams prioritized energy efficiency and adopted low-power approaches, they found difficulties in the specification, implementation, and verification of power management structures. Prior to today’s era of standardization and automation, they didn’t have many resources to solve design problems. The nonprofit organization Accellera Systems Initiative launched UPF for the EDA industry to enable low-power design and verification.

Accellera released UPF 1.0 in 2007 and presented it to the Institute of Electrical and Electronics Engineers (IEEE), which published it as the IEEE 1801 standard in 2009. Since then, UPF has served as a North Star for chip designers tackling low-power, energy-efficient electronic systems and SoCs. In the years since, new iterations of UPF have been published to advance alongside semiconductor technology enhancements.

Using UPF in Designs

UPF outlines design power intent, specifying control signals, routing, block configuration, and more. Its backbone is the Tool Command Language (Tcl) scripting language, which enables automation for design software, providing specific recommendations to meet low-power standards. On average, development teams report more than a dozen significant challenges related to implementation, specification, and verification of structures.

With UPF, the ability to determine the intended design operation in terms of power management has proven to be effective in overcoming these challenges. Successful implementation of low-power semiconductor designs includes checking UPF descriptions and verifying UPF against the design at multiple stages in the project. Typically, low-power design involves standard control signals such as:

- Isolation enables

- Clocks, resets

- Save, restore, and retain

- Power switch enables, acknowledgement

UPF defines the standard specifications for these control signals, which are traditionally distributed through a power management unit (PMU). The most common issues that design teams encounter with low-power signals include complex logic connectivity, incorrect buffers, retimed flops for high-fanout net handling, blocked control paths, and swapped connections. A UPF file specifies several key attributes for a low-power design, including:

- Power supplies: supply nets, supply sets, power states

- Power control: power switches

- Level shifters and isolation cells

- Memory retention strategies and supply set power states

- Power states and transitions

- Power/ground pin type
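The swapped and blocked control connections mentioned above can be illustrated with a small sketch. It is written in Python rather than UPF, and it is not how any particular tool works: the `ControlIntent` record, the `find_miswired` helper, and all pin and signal names are hypothetical. It simply compares the driver each control pin is intended to have with the driver found in a (toy) netlist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlIntent:
    pin: str     # control pin on a low-power cell (hypothetical name)
    source: str  # PMU output expected to drive that pin (hypothetical name)


def find_miswired(intent, drivers):
    """Return (pin, expected, actual) for each pin whose driver is wrong.

    `drivers` maps a pin to the signal driving it in the netlist.
    A pin absent from `drivers` is reported with actual=None (unconnected).
    """
    issues = []
    for item in intent:
        actual = drivers.get(item.pin)
        if actual != item.source:
            issues.append((item.pin, item.source, actual))
    return issues


# Intended connectivity for one power domain (illustrative only).
intent = [
    ControlIntent("u_cpu/iso_cell/en", "pmu/iso_en_cpu"),
    ControlIntent("u_cpu/ret_flop/save", "pmu/save_cpu"),
    ControlIntent("u_cpu/ret_flop/restore", "pmu/restore_cpu"),
    ControlIntent("u_cpu/sw/en", "pmu/sw_en_cpu"),
]

# Toy netlist: save/restore are swapped and the switch enable is missing.
drivers = {
    "u_cpu/iso_cell/en": "pmu/iso_en_cpu",
    "u_cpu/ret_flop/save": "pmu/restore_cpu",
    "u_cpu/ret_flop/restore": "pmu/save_cpu",
}

issues = find_miswired(intent, drivers)
for pin, expected, actual in issues:
    print(f"{pin}: expected {expected}, found {actual}")
```

A real static checker works on the elaborated design and the UPF together; the point here is only that each of these issue types reduces to a mismatch between intended and actual connectivity.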

As SoC designs evolve and include more logic functions to meet advanced requirements, the use of complex macros and memories is becoming more common. These cells can have their own low-power modes and functionality, which adds unique complexities to the design flow because the primitive connectivity specifications within UPF are insufficient to meet the verification requirements and architectural-level specifications.

Expanding Low-Power Signoff: UPF and Beyond

Typically, once the initial steps for low-power design are conducted — selection of low-power components, system simulations, UPF, and register transfer level (RTL) coding — designers move to the verification phase, which requires a comprehensive toolkit with several capabilities. The initial step is static power verification and exploration, ensuring the inputs to the design flow (RTL, UPF, and SDC) are structurally and syntactically correct.

Designers need to run lint and clock domain crossing (CDC) checks to make sure the RTL is clean. UPF and SDC checks can then be conducted concurrently with the RTL checks, so a tool that can run these checks and also perform power analysis to confirm that the design functions properly is key. Software-driven power analysis comes next. For emulation-based low-power flows, it is important for chip designers to ensure that the peak windows of the design’s power profile are used to generate the waveforms from which power is estimated.

The power implementation phase includes several steps for power estimation, logic synthesis, and generating a netlist. Once the checks are complete, the final physical components are placed and routed. During the final step – signoff – designers must ensure that the connections and changes made to the netlist and UPF are consistent and clean, and the power intent is preserved.

Over the years, UPF has grown by incorporating several advanced capabilities. These range from simplifying the power-intent specification process to aligning with the power-management flow requirements of IP-based SoC designs. UPF also supports verification of low-power control signals by specifying the control-signal connectivity of typical low-power cells such as isolation cells, retention cells, and coarse-grain power switches.

Open Issues in UPF

However, for certain low-power cells, such as hard RAMs and hard macros, the connectivity of low-power control signals is unclear. This makes verification a complex and manual process, often leading to costly bug escapes. Simulation can identify some of these issues but is contingent on a robust simulation environment and corresponding debug capabilities. It also occurs very late in the verification cycle, increasing the cost of verification.

Cases where UPF does not provide a way to define the specification include:

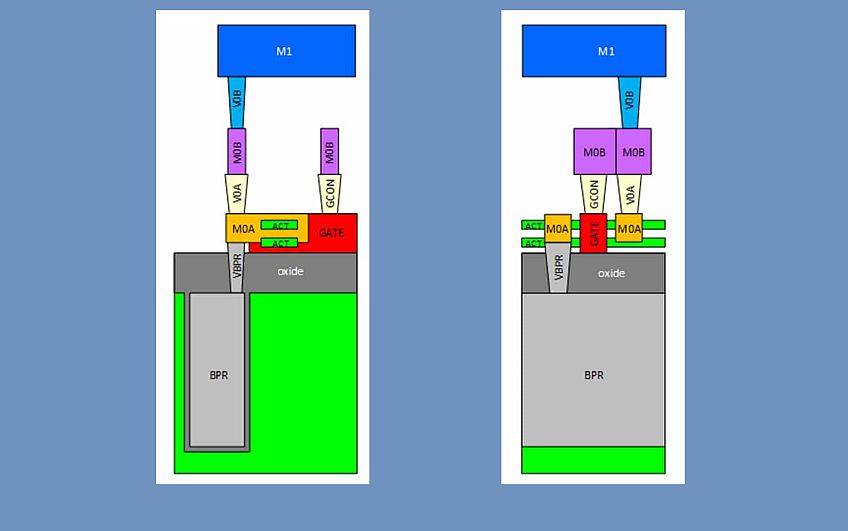

- While UPF is extensive, the control signal connectivity for low-power cells such as RAMs and hard macros remains undefined. Chips are often designed with several RAM cells, and their architecture within the chip is critical to define memory controls and enable low-power optimization features such as sleep and retention enables. During the design process, engineers frequently rely on simulation to find connectivity issues and other power-related bugs. However, simulations are time-intensive and can take days.

- Hard macros present a similar problem. They often comprise several blocks built into the chip’s design and are internally isolated. UPF doesn’t provide checks for internal isolation control or polarity for internally isolated pins.

- It is also important to verify that an IP-level control signal connects to the correct SoC signal when the IP is integrated into the SoC, to ensure accurate verification at the SoC level. Currently, UPF does not have a mechanism to define the specification for this connectivity.

- Power state table (PST) dependent isolation enable checks are another area. The low-power architect usually defines how the isolation enable signal and its sense relate to a supply. If the isolation enable becomes active or inactive in the wrong power state, it can propagate corruption or a clamp value toward powered-on logic, which may not be intended in that PST.
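To make the last case concrete, here is a minimal Python sketch of a PST-dependent isolation enable check. Everything in it is an assumption made for illustration: the table layout, the supply names `VDD_CPU` and `VDD_AON`, the state names, and the rule itself (isolation must be active when the source supply is off and the sink supply is on, and inactive when both are on). A real architecture may define the relationship differently.

```python
def check_iso_enable(pst, source, sink, iso_active):
    """Flag power states where the isolation enable contradicts the supplies.

    pst        : state name -> {supply name: "ON" or "OFF"}
    source     : supply of the domain driving the isolated signals
    sink       : supply of the domain receiving them
    iso_active : state name -> True if isolation is enabled in that state
    """
    violations = []
    for state, supplies in pst.items():
        src_on = supplies[source] == "ON"
        sink_on = supplies[sink] == "ON"
        active = iso_active[state]
        if sink_on and not src_on and not active:
            # Source is off and nothing clamps its outputs.
            violations.append((state, "corruption reaches powered-on logic"))
        elif sink_on and src_on and active:
            # Both sides are live but the clamp value overrides real data.
            violations.append((state, "unintended clamp value on a live path"))
    return violations


# Hypothetical three-state power state table.
pst = {
    "RUN":   {"VDD_CPU": "ON",  "VDD_AON": "ON"},
    "SLEEP": {"VDD_CPU": "OFF", "VDD_AON": "ON"},
    "OFF":   {"VDD_CPU": "OFF", "VDD_AON": "OFF"},
}

# Isolation enable with the wrong sense: active in RUN, inactive in SLEEP.
iso_active = {"RUN": True, "SLEEP": False, "OFF": False}

violations = check_iso_enable(pst, "VDD_CPU", "VDD_AON", iso_active)
for state, reason in violations:
    print(f"{state}: {reason}")
```

With the sense flipped as above, both the `RUN` and `SLEEP` states are flagged, while `OFF` is ignored because the receiving logic is not powered.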

To support the increasing demands of advanced power management from many of today’s electronic products, it is critical to have a comprehensive low-power verification tool that validates the final design functions accurately and can accomplish all of the phases for UPF and RTL checks, power analysis, and signoff. For more information on UPF and pre-empting low-power issues, watch this webinar.

Tackling the Power Monster with UPF Checks Throughout the Design Process

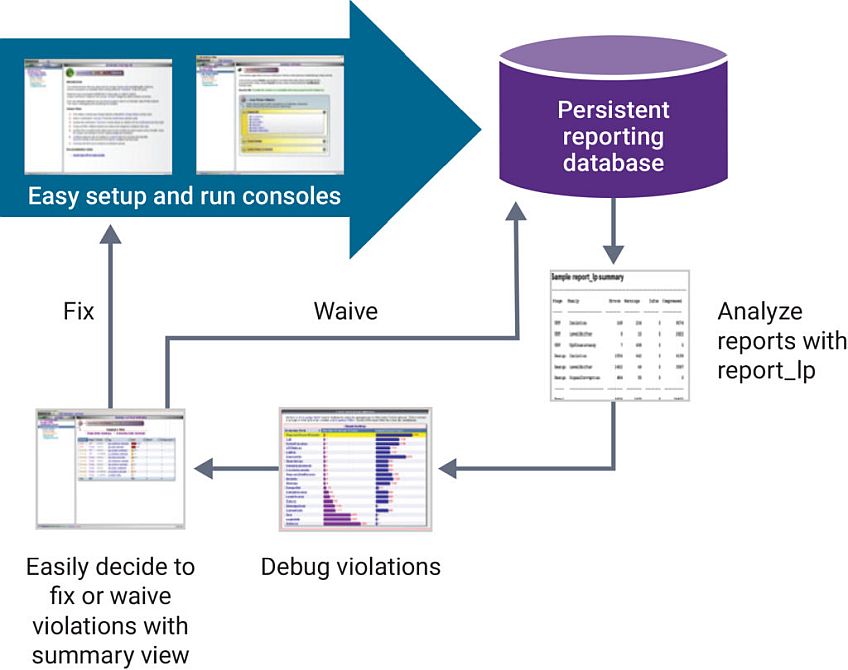

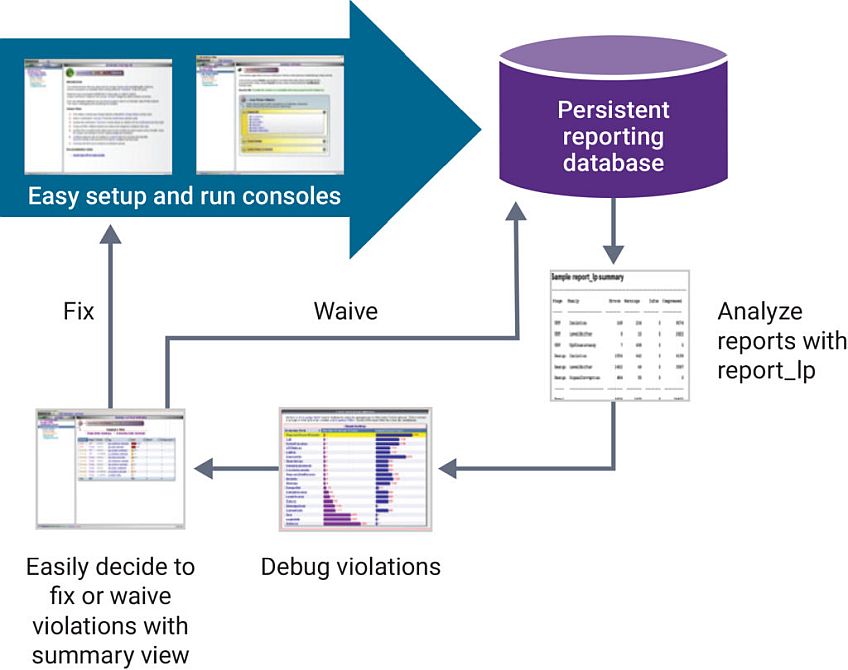

Given the nature of low-power design architectures and behavior, verification and signoff for low-power designs have become more challenging compared to always-on designs. As you’re evaluating verification technologies, consider solutions that are capable of extensive, low-noise reporting, filtering, and waiving to help simplify and also expedite complex, low-power verification signoff flows. Equipped to fully analyze chip performance and capabilities, you can be in a solid position to find and solve low-power bugs faster.

To address the RAM cells, a solution that allows you to quickly conduct full connectivity checks and identify potential problems can mitigate resource costs. As for the hard macros, a solution that allows you to specify control and polarity for these internal components can address the associated challenges.
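As a rough illustration of what such a control and polarity specification could look like, the Python sketch below traces a RAM or hard-macro control pin back through buffers and inverters and compares the result with a user-written spec. The netlist encoding, the `trace` and `check_macro_pins` helpers, and all pin names are hypothetical, and the sketch does not represent the format or behavior of any specific tool.

```python
def trace(netlist, pin):
    """Walk back from `pin` through buffers and inverters.

    netlist maps a pin or net to (cell_kind, driving_net).
    Returns (endpoint, inverted): the endpoint is the first node with no
    entry in the netlist, or the node driven by a cell that is neither a
    buffer nor an inverter (a blocked path).
    """
    inverted = False
    seen = set()
    node = pin
    while node in netlist:
        if node in seen:
            raise ValueError(f"loop detected at {node}")
        seen.add(node)
        kind, driver = netlist[node]
        if kind == "inv":
            inverted = not inverted
        elif kind != "buf":
            return node, inverted  # path blocked by other logic
        node = driver
    return node, inverted


def check_macro_pins(netlist, spec):
    """spec maps pin -> (expected source, expected inversion along the path)."""
    problems = []
    for pin, (source, inverted) in spec.items():
        found, found_inv = trace(netlist, pin)
        if found != source:
            problems.append((pin, f"reaches {found}, expected {source}"))
        elif found_inv != inverted:
            problems.append((pin, "polarity does not match the spec"))
    return problems


# Toy netlist with one polarity bug and one blocked path.
netlist = {
    "u_ram0/SLEEP": ("buf", "n1"),
    "n1": ("inv", "pmu/ram_sleep"),
    "u_macro/ISO_N": ("inv", "pmu/iso_en"),
    "u_ram1/RET": ("buf", "n2"),
    "n2": ("and", "n3"),
}

# Hypothetical custom spec: source signal and whether it should arrive inverted.
spec = {
    "u_ram0/SLEEP": ("pmu/ram_sleep", False),
    "u_macro/ISO_N": ("pmu/iso_en", True),
    "u_ram1/RET": ("pmu/ram_ret", False),
}

problems = check_macro_pins(netlist, spec)
for pin, message in problems:
    print(f"{pin}: {message}")
```

The same spec format could also describe which SoC-level signal an IP-level control pin is expected to reach after integration, since that is again a source-plus-polarity statement.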

The Synopsys VC LP™ static low-power verification solution supports UPF checks across power-intent consistency, architecture at RTL, structure and power and ground (PG), and functionality. VC LP is a multi-voltage low-power static rule checker that allows developers to validate UPF low-power design intent quickly and accurately. It also features hierarchical power state analysis, power state table debug, and Synopsys Verdi® debug. VC LP also provides solutions for the hard macro and RAM cell cases that UPF doesn’t cover.

The platform includes over 650 checks, covering electrical and architectural evaluations, and offers full-chip performance and capacity for comprehensive signoff. It allows users to conduct checks at every step of the chip design process, from register-transfer level (RTL) to post-synthesis and post-place-and-route PG netlist. VC LP offers predictive checks for designers to identify potential implementation problems, allowing for discovery and remediation earlier in the process, and supports multiple hierarchical flows, such as Black Box and Extracted Timing Models (ETM).

As low-power design continues to become an increasingly important priority and more devices connect to the internet, chip developers will have to keep pace with demand. Design teams will need to prioritize low-power designs and employ advanced power management techniques to operate across all power states going forward.